Dealing with Big Data

By Amber E. Watson

November 15, 2013

http://www.softwaremag.com/content/ContentCT.asp?P=3457

Collecting, managing, processing, and analyzing big data are critical to business growth.

Big data holds the key to the critical information an organization needs to attract and retain customers, grow revenue, cut costs, and transform business.

According to SAS, big data describes the exponential growth, availability, and use of structured and unstructured information. Big data is growing at a rapid rate, and it comes from sources as diverse as sensors, social media posts, mobile users, advertising, cybersecurity, and medical images.

Organizations must determine the best way to collect massive amounts of data from various sources, but the challenge lies in how that data is managed and analyzed for the best return on investment (ROI). The ultimate goal for organizations is to harness relevant data and use it to make the best decisions for growth.

Technologies today not only support the collection and storage of large amounts of data, but they also provide the ability to understand and take advantage of its full value.

Challenges of Collecting Big Data

A common description of big data involves “the four V’s,” including “the growing volume, which is the scale of the data; velocity—how fast the data is moving; variety—the many sources of the data; and veracity—how accurate and truthful is the data,” explains James Kobielus, big data evangelist, IBM.

Organizations strive to take advantage of big data to better understand the customer experience and behavior, and to tailor content to maximize opportunities.

Steve Wooledge, VP of marketing, Teradata Unified Data Architecture, notes that gaining insights from big, diverse data typically involves four steps: data acquisition, data preparation, analysis, and visualization.

“One of the key challenges in collecting big data is the ability to capture it without too much up-front modeling and preparation,” he says. “This becomes especially important when data comes in at a high velocity and variety due to different types of data—for example, Web logs generated from visitor activity on high-traffic ecommerce Web sites, or text data from social media sites.”

Separate, disconnected silos of data also increase the time and effort required for data analysis, which is why integrated and unified interfaces are preferred.

Webster Mudge, senior director of technology solutions, Cloudera, agrees that the variety of big data presents a particular issue because a disproportionate amount of data growth experienced by organizations is with unstructured or variable structured information, such as sensor and social media content.

“It is difficult for business and IT teams to fully capture these forms of data in traditional systems because they do not typically fit cleanly and efficiently with traditional modeling approaches,” he points out. “Thus, organizations have trouble extracting value from these forms due to model constraints and impedance.”

With a large volume of incoming data, transferring it reliably from sources to destinations over a wide-area network is also challenging. “Once data arrives at the destination, it is often logged or backed up for recoverability. The next step is ingesting large volumes of data in the data warehouse. Because loading tends to be continuous, the biggest challenge is ingesting and concurrently allowing reporting/analytics against the data,” states Jay Desai, co-founder, XtremeData Inc.

Mike Dickey, founder/CEO, Cloudmeter, explains that traditionally, network data was captured with intrusive solutions that modified the source code of Web applications (apps). “Companies either add extra code that runs within Web browsers, known as ‘page tags,’ or modify the server code to generate extra log files,” he notes.

“But both approaches restrict companies to capture only a subset of the information available because the excess code introduces significant latency, harming performance, and degrading customer experiences. It is also a nightmare on the back end for IT to stay on top of dynamic, ever-changing sites.”

To get the best use of one’s data, George Lumpkin, VP of product management, big data and data warehousing, Oracle, advises an organization to first understand what data it needs and how to capture it.

“In some cases, an organization must investigate new technologies like Apache Hadoop or NoSQL databases. In other cases, it may be required to add a new kind of cluster to run the software, increase networking capacity, or increase speed of analytics. If the use case involves real-time responses on streaming data—as opposed to more batch-oriented use—there are tools around event processing, caching, and decision automation,” he says.

These considerations, of course, must be integrated with the existing information infrastructure. “Big data should not exist in a vacuum,” says Lumpkin. “It is the combination of new data and existing enterprise data that yields the best new insight and business value.”

With new technology comes the need for new skills. “There is an assumption that since Hadoop is open source and available for free, somebody should just download it and get started. While the early adopters of Hadoop did take this approach, they have significantly developed their skill sets over time to install, tune, use, update, and support the software internally, efficiently, and effectively,” shares Lumpkin.

The key to realizing value with big data is through analytics, so organizations are advised to invest in new data science skills, adopting and growing statistical analysis capabilities.

Big Data Management

When it comes time to analyze big data, the analytics are only as good as the data. It is important to think carefully about how data is collected and captured ahead of time. Sometimes businesses are left with information that doesn’t correlate, and are unable to pull the analytics needed from the information gathered.

Data preparation and analysis are major undertakings. “Data acquisition and preparation can itself take up 80 percent of the effort spent on big data if done using suboptimal approaches and manual work,” cautions Teradata’s Wooledge.

“One key challenge of managing data is preparing different types of data for analysis, especially for multistructured data. For example, Web logs must first be parsed and sessionized to identify the actions customers performed and the discrete sessions during which customers interacted with the Web site. Given the volume, variety, and velocity of big data, it is impossible to prepare this data manually,” he adds.
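To make the sessionizing step concrete, here is a minimal Python sketch. It is not Teradata's method; it assumes each parsed log entry is a (visitor, timestamp, action) tuple and that a session ends after 30 minutes of inactivity. The field layout, the timeout, and the sample events are all hypothetical.

```python
from datetime import datetime, timedelta
from itertools import groupby
from operator import itemgetter

# Assumed inactivity timeout; real sites tune this value.
SESSION_GAP = timedelta(minutes=30)


def sessionize(events, gap=SESSION_GAP):
    """Group (visitor, timestamp, action) tuples into per-visitor sessions.

    A new session starts whenever the same visitor has been idle
    for longer than `gap`.
    """
    sessions = []
    # Sort by visitor, then by time, so each visitor's events are contiguous.
    ordered = sorted(events, key=itemgetter(0, 1))
    for visitor, visitor_events in groupby(ordered, key=itemgetter(0)):
        current = []
        last_seen = None
        for _, ts, action in visitor_events:
            if last_seen is not None and ts - last_seen > gap:
                sessions.append((visitor, current))
                current = []
            current.append((ts, action))
            last_seen = ts
        sessions.append((visitor, current))
    return sessions


# Hypothetical parsed Web log entries.
events = [
    ("v1", datetime(2013, 11, 15, 9, 0), "view_home"),
    ("v2", datetime(2013, 11, 15, 9, 2), "search"),
    ("v1", datetime(2013, 11, 15, 9, 5), "add_to_cart"),
    ("v1", datetime(2013, 11, 15, 10, 30), "view_home"),
]
sessions = sessionize(events)
for visitor, actions in sessions:
    print(visitor, [action for _, action in actions])
```

In this sample, visitor v1's return at 10:30 comes more than 30 minutes after the 9:05 event, so it is counted as a separate session. At production volumes the same logic would run on a distributed platform rather than in a single process.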

Wooledge advises organizations to use an iterative discovery process and to combine different analytic techniques to solve a business problem effectively. “Discovery is an interactive process that requires rapid processing of large data volumes in a scalable manner. Once a user changes one or more parameters of the analysis and incorporates additional data using a discovery platform, they are able to see results in minutes versus waiting hours or days before they are able to do the next iteration.”

In addition, many big data problems require more than one analytic technique to be applied at the same time to produce the right insight to solve the problem most effectively. The ability to easily use different analytic techniques on data collected during the discovery process amplifies the effectiveness of the effort.

Cloudera’s Mudge shares that traditional approaches to modeling and transformation assume that a business understands what questions need to be answered by the data. However, if the value of the data is unknown, organizations need latitude within their modeling processes to easily change the questions in order to find the answers.

“Traditional approaches are often rigid in model definition and modification, so changes may take significant effort and time. Moreover, traditional approaches are often confined to a single computing framework, such as SQL, to discover and ask questions of the data. And in the era of big data, organizations require options for how they compute and interpret data and cannot be limited to one approach,” he notes.

After collecting data, an organization is sitting on a large quantity of new data that is unfamiliar in content and format. “For example, a company may have a large volume of social media comments coming in at high velocity; some of those comments are made by important customers. The question becomes how to disambiguate this data or match the incoming data with existing enterprise data. Of all the data collected, what is potentially relevant and useful?” asks Oracle’s Lumpkin.

Next, the company must try to understand how the new data interrelates to determine hidden relationships and help transform business. “A combination of social media comments, geographic location, past response to offers, buying history, and several other factors may predict what it needs. The team just has to figure out this winning combination,” shares Lumpkin.

Data governance and security are also important considerations. Does a company have the right to use the data it captures? Is the data sensitive or private? Does it have a suitable security policy to control and audit access?

Harnessing Big Data

To harness diverse data, outsmart the competition, and achieve the highest ROI, Wooledge advises organizations to include all types of data across customer interactions and transactions.

“Traditional approaches of buying hardware/software do not often work for big data because of scale, complexities, and costs,” adds XtremeData’s Desai. “Cloud-based, big data solutions help companies avoid up-front commitments and have infinite on-demand capacity.”

Paco Nathan, director of data science, Concurrent, Inc., adds, “Companies today recognize the importance of collecting data, aggregating into the cloud, and sharing with corporate IT for centralized analysis and decisions. The ecommerce sector, including Amazon, Apple, and Google, for example, has fared well with big data in terms of marketing funnel analytics, online advertising, customer recommendation systems, and anti-fraud classifiers.”

Another facet to consider is productivity since some of the biggest costs associated with big data are labor and lost opportunity. To mitigate this, Desai recommends that companies look for “load-and-go” solutions/services, meaning they do not require heavy engineering or optimization and can be deployed quickly for business use.

According to IBM’s Kobielus, a differentiated big data analytics approach is needed to keep up with the ever-changing requirements of a business. “Alternative, one-size-fits-all approaches of applying the same analytics to different challenges are flawed in that they do not address the fact that on any given day, a business may need to quickly understand consumer purchases, more effectively manage large financial data sets, and more seamlessly detect fraud in real time,” he says.

A comprehensive, big data platform strategy ensures reliable access, storage, and analysis of data regardless of how fast it is moving, what type it is, or where it resides.

Mudge recommends that data maintain its original, fine-grain format. “By storing data in full fidelity, future interpretation of the data is not constrained by earlier questions and decisions, and associated modeling and transformation efforts. By keeping data in its native format, organizations are free to repeatedly change its interpretation and examination of the original data according to the demands of the business,” he explains.
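The idea of keeping data in its native format and deciding on an interpretation later can be sketched in a few lines of Python. This is an illustration only, not Cloudera's implementation; the JSON records, field names, and both "questions" are hypothetical.

```python
import json
from collections import Counter

# Raw events stored exactly as they arrived (hypothetical sample records).
RAW_LINES = [
    '{"user": "a", "action": "view", "item": "book", "ts": "2013-11-15T09:00:00"}',
    '{"user": "b", "action": "buy", "item": "book", "ts": "2013-11-15T09:05:00", "price": 12.5}',
    '{"user": "a", "action": "buy", "item": "lamp", "ts": "2013-11-15T09:07:00", "price": 30.0}',
]


def read_raw(lines):
    """Parse each stored line on demand; nothing is discarded up front."""
    for line in lines:
        yield json.loads(line)


def actions_per_user(lines):
    """First question: how active is each user?"""
    return Counter(rec["user"] for rec in read_raw(lines))


def revenue_per_item(lines):
    """A later question, answered from the same untouched raw data."""
    totals = {}
    for rec in read_raw(lines):
        if rec.get("action") == "buy":
            totals[rec["item"]] = totals.get(rec["item"], 0.0) + rec.get("price", 0.0)
    return totals


print(actions_per_user(RAW_LINES))
print(revenue_per_item(RAW_LINES))
```

Because the price field was never dropped to fit an earlier model, the second question can be asked without collecting the data again.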

Cloudmeter’s Dickey points out the importance of having an analytics solution that processes and feeds data in real time. “Since today’s online business operates in real time, it is critical to be able to respond in a timely manner to trends and events that may impact an online business and the customer experience,” he says.

As Kobielus points out, petabytes of fast-moving, accurate data from a variety of sources have little value unless they provide actionable information. It is not just the fact that there is big data; it is what you do with it that counts.

Products to Help

Several key technologies are available to help organizations get a handle on big data and to extract meaningful value from it.

Cloudera – Cloudera’s Distribution Including Apache Hadoop (CDH) is a 100 percent open-source platform for big data for enterprises and organizations. It offers scalable storage, batch processing, interactive SQL, and interactive search, along with other enterprise-grade features such as continuous availability of data. CDH is a collection of Apache projects; Cloudera develops, maintains, and certifies this configuration of open-source projects to provide stability, coordination, ease of use, and support for Hadoop.

The company offers additional products for CDH, including Cloudera Standard—which combines CDH with Cloudera Manager for cluster management capabilities like automated deployment, centralized administration and monitoring, and diagnostic tools—and Cloudera Enterprise, a comprehensive maintenance and support subscription offering.

Cloudera also offers a number of computing frameworks and system tools to accelerate and govern work on Hadoop. Cloudera Navigator provides a centralized data management application for data auditing and access management, and extends governance, risk, and compliance policies to data in Hadoop. Cloudera Impala is a low-latency, SQL query engine that runs natively in Hadoop and operates on shared, open-standard formats. Cloudera Search, which is powered by Apache Solr, is a natural language, full-text, interactive search engine for Hadoop and comes with several scalable indexing options.

Cloudmeter – Cloudmeter captures and processes data available in companies’ network traffic to provide marketing and IT with complete insight into their users’ experiences and behavior. Cloudmeter works with companies whose Web presence is critical to their business, including Netflix, SAP, Saks Fifth Avenue, and Skinit.

Cloudmeter Stream and Cloudmeter Insight use ultralight agents that passively mine big data streams generated by Web apps, enabling customers to gain real-time access into the wealth of business and IT information available without connecting to a physical network infrastructure.

Now generally available, Cloudmeter Stream can be used with other big data tools, such as Splunk, GoodData, and Hadoop. Currently in private beta, Cloudmeter Insight is the first software-as-a-service application performance management product to include visual session replay capabilities so users can see the individual visitor sessions and the technical information behind them.

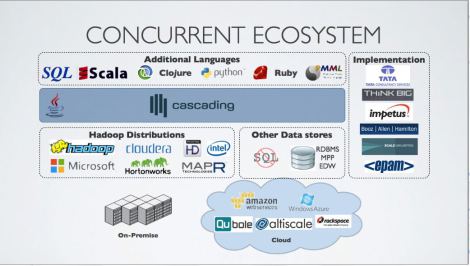

Concurrent, Inc. – Concurrent, Inc. is a big data company that builds application infrastructure products designed to help enterprises create, deploy, run, and manage data processing applications at scale on Hadoop.

Concurrent’s Cascading framework is used by companies like Twitter, eBay, The Climate Corporation, and Etsy to streamline data processing, data filtering, and workflow optimization for large volumes of unstructured and semi-structured data. By leveraging Cascading, enterprises can apply Java, SQL, and predictive modeling investments and combine the respective outputs of multiple departments into a single application on Hadoop.

Most recently, Concurrent announced Pattern—a standards-based scoring engine that enables analysts and data scientists to quickly deploy machine-learning applications on Hadoop—and Lingual, a project that enables fast and simple big data application development on Hadoop. All tools are open source.

IBM – IBM’s big data portfolio of products includes InfoSphere BigInsights—an enterprise-ready, Hadoop-based solution for managing and analyzing large volumes of structured and unstructured data.

According to IBM, InfoSphere Streams enables continuous analysis of massive volumes of streaming data with submillisecond response times. InfoSphere Data Explorer is discovery and navigation software that provides real-time access and fusion of big data with rich and varied data from enterprise applications for greater insight and ROI. IBM PureData for Analytics simplifies and optimizes performance of data services for analytic applications, enabling complex algorithms to run in minutes.

Finally, DB2 with BLU Acceleration provides fast analytics to help organizations understand and tackle large amounts of big data. BLU provides in-memory technologies, parallel vector processing, actionable compression, and data skipping, which speeds up analytics for the business user.

LexisNexis – High-Performance Computing Cluster (HPCC) Systems from LexisNexis is an open-source big data processing platform designed to solve big data problems for the enterprise. HPCC Systems provides HPCC technology with a single architecture and a consistent data-centric programming language. HPCC Systems offers a Community Edition, which includes free platform software with community support, and an Enterprise Edition, which includes platform software with enterprise-class support.

Oracle – Oracle’s big data solutions are grouped into three main categories—big data analytics, data management, and infrastructure. Big data analytics are covered by Oracle Endeca Information Discovery—a data discovery platform that enables visualization and exploration of information, and uncovers hidden relationships between data, whether it be new, unstructured, or trusted data kept in data warehouses.

Oracle Database, including Oracle Database 12c, contains a number of in-database analytics options. Oracle Business Intelligence Foundation Suite provides comprehensive capabilities for business intelligence, including enterprise reporting, dashboards, ad hoc analysis, multidimensional online analytical processing, score cards, and predictive analytics on an integrated platform. Oracle Real-Time Decisions automates decision management and includes business rules and self-learning to improve offer acceptance over time.

Oracle’s data management tools are used to acquire and organize big data across heterogeneous systems. Oracle NoSQL Database is a scalable key/value database, providing atomicity, consistency, isolation, and durability (ACID) transactions and predictable latency. MySQL and MySQL Cluster are widely used for large-scale Web applications. Oracle Database is a foundation for successful online transaction processing and data warehouse implementations. Oracle Big Data Connectors link Oracle Database and Hadoop. Oracle Data Integrator is used to design and implement extract, transform, and load processes that move and integrate new data and existing enterprise data. Oracle also distributes and supports Cloudera’s Distribution Including Apache Hadoop (CDH).

Lastly, for big data infrastructure, Oracle says its Engineered Systems ship pre-integrated to reduce the cost and complexity of IT infrastructures while increasing the productivity and performance of a data center to better manage big data. Oracle Big Data Appliance is a platform for Hadoop to acquire and organize big data.

SAS – SAS offers information management, which provides strategies and solutions that enable big data to be managed and used effectively through high-performance and visual analytics, and flexible deployment options.

Flexible deployment models bring choice. High-performance analytics from SAS are able to analyze billions of variables, and these solutions can be deployed in the cloud with SAS or another provider, on a dedicated high-performance analytics appliance, or within existing IT infrastructure—whichever best suits an organization’s requirements.

Teradata – Within the Unified Data Architecture, Teradata integrates data and technology to maximize the value of all data, providing new insights to thousands of users and applications across the enterprise.

According to Teradata, its Aster Discovery Platform, which includes the Teradata Aster Database and Teradata Aster Discovery Portfolio, offers a single unified platform that enables organizations to access, join, and analyze multistructured data from a variety of sources from a single SQL interface. The Visual SQL-MapReduce functions and out-of-the-box functionality for integrated data acquisition, data preparation, analysis, and visualization in a single SQL statement generate business insights from big, diverse data.

The recently announced Teradata Portfolio for Hadoop provides open, flexible, and comprehensive options to deploy and manage Hadoop.

XtremeData – XtremeData is a petabyte-scale SQL data warehouse for big data analytics on private and public clouds. XtremeData’s “load-and-go” structure means that users implement schema, load data, and start running queries using industry standard SQL tools. XtremeData allows users to freely run queries against multiple large tables and continuously add new data sets without the need for performance engineering.

XtremeData is designed for big data applications that need continuous, real-time ingests and interactive analytics with high availability, such as digital/mobile advertising, gaming, social, Web, networking, telecom, and cybersecurity.

More Data, More Success

While the sheer volume and variety of big data available today present a challenge for organizations to capture, manage, and analyze, they also hold the key to business growth and success. “Leveraging and analyzing big data enables organizations and industries as a whole to generate powerful, disruptive, even radical transformations,” explains IBM’s Kobielus.

Organizations with access to large data collections strive to harness the most relevant data and use it for optimized decision-making. Big data technologies not only support the ability to collect large amounts of data, but they also provide the ability to understand it and take advantage of its value for better ROI. The more information an organization is able to collect and analyze successfully, the more it has the ability to change, adapt, and succeed long term.

Nov2013, Software Magazine